What is a robots.txt file? How do you create a robots.txt file? Add an SEO-friendly custom robots.txt file in Blogger

Do you want to create your own robots.txt file for your Blogger blog? If yes, this post will guide you through it in easy, straightforward steps. Here I will discuss what a robots.txt file is and how to add a custom robots.txt file in Blogger. Blogger recently added an option called search preferences to the Blogger dashboard that allows you to add custom robots header tags and a custom robots.txt file.

I highly recommend that you understand the robots.txt file completely before making any changes to it, because this file governs the crawling and indexing of your entire blog, and a mistake may harm your SEO. So first, we will learn about the robots.txt file.

What is robots.txt?

The robots.txt file applies to all types of crawlers or spiders, such as Googlebot, which is the Google search engine's spider. In simple words, search engines always want to index fresh, new content on the web, so they send their spiders or crawlers to crawl new pages.

If the spiders find new pages, they are likely to index them. This is where the robots.txt file comes into the picture on your behalf: well-behaved spiders or crawlers crawl, and therefore normally index, only the pages you allow in your robots.txt file.

Keep in mind that the crawler or spider looks at your robots.txt file first, so that it can obey the rules you have set. If you have disallowed a page in your robots.txt file, the spiders will follow the rule and will not crawl that page for their search engine.

Where is the robots.txt file located?

The robots.txt file is placed in the root directory of a website or blog, so spiders always know where to find it. If you don't have a robots.txt file in the root directory of your domain, crawlers will by default crawl and index every page of your blog.

In WordPress, you can easily create your robots.txt file and place it in the root directory using your control panel's file manager or any FTP client such as FileZilla.

In Blogger, you have no way to access the root directory of your blogspot blog or custom Blogger domain. Thanks to Google, which recently added the search preferences option, you can now easily edit your meta header tags, robots tags, and nofollow and dofollow tags for different page types. We will use this option to create a custom robots.txt file. Before creating a robots.txt file, you must know the rules, because any improper use can harm your site's SEO.

You can access your robots.txt file by adding /robots.txt to the root URL of your blog or website. If your website's domain URL is "www.yourdomain.com", then your robots.txt file is at the URL "www.yourdomain.com/robots.txt".

If you have a blogspot domain, you can access it at the URL "yourdomain.blogspot.com/robots.txt". You may also check my robots.txt file.
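As a small illustration of the rule above, here is a minimal Python sketch that works out the robots.txt URL for any page of a site. The function name `robots_txt_url` and the example page URLs are made up for this example; only the "/robots.txt at the root" rule comes from the text.

```python
from urllib.parse import urljoin


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    # The leading "/" makes urljoin resolve against the root of the host,
    # no matter how deep inside the site page_url is.
    return urljoin(page_url, "/robots.txt")


# Hypothetical page URLs, used only for illustration.
print(robots_txt_url("https://www.yourdomain.com/2020/01/some-post.html"))
print(robots_txt_url("https://yourdomain.blogspot.com/p/about.html"))
```

Whatever page you start from, the result always points at the root of the same host.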

How to create a robots.txt file

Creating a robots.txt file is really simple; you just have to know some rules, which I am going to discuss below.

"If you have a WordPress blog, you can create a text document on your desktop and rename it to robots.txt. You are ready to go with an empty robots.txt file. You can upload it to the root directory of your domain. You can then create rules as you need them by writing some text into this file."

"If you have a Blogger blog, creating your robots.txt file is now even easier than in WordPress. I will come to that later, but first I want to discuss the rules and the kinds of commands you can write in this file."

First, if your domain doesn't have a robots.txt file, you are allowing every spider or crawler to crawl and index all of your blog's pages.

Second, suppose you have a robots.txt file that is blank. This also means you are allowing crawlers to index all the pages of your website.

Third, suppose you have a robots.txt file and write the following two lines in it.

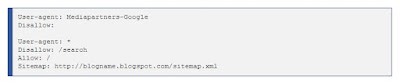

Here "User-agent" names the crawler or spider that the rules apply to. It may be one of Google's crawlers, such as Googlebot for the search engine or Mediapartners-Google for Google AdSense. In the above robots.txt file, I have used "*" as the value of User-agent, which means we are creating rules for all crawlers, spiders, or robots.

In the second line, we are allowing everything to be crawled and indexed by crawlers. Don't be confused! ("Disallow: " is the same as "Allow: /", and it is not the same as "Disallow: /".) If "Disallow: " has no value, you are allowing everything to be crawled and indexed, while if it has the value "/", as in "Disallow: /", you are disallowing the spiders from crawling and indexing anything.

Now, here is a quick cheat sheet to help you understand the rules.

Block all content from all web crawlers

Allow all content to all web crawlers
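The two entries above follow directly from the "Disallow: /" and empty "Disallow: " rules described earlier. Here is a minimal sketch that writes them out and checks them with Python's standard `urllib.robotparser` module. The helper `can_crawl`, the constant names, and the example post path are assumptions made for this illustration.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, agent: str, path: str) -> bool:
    """Parse robots.txt text and report whether agent may fetch path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


# Block all content from all web crawlers.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"

# Allow all content to all web crawlers (empty Disallow value).
ALLOW_ALL = "User-agent: *\nDisallow:\n"

# The same permission written with an explicit Allow rule.
ALLOW_ALL_EXPLICIT = "User-agent: *\nAllow: /\n"

POST = "/2020/01/some-post.html"  # hypothetical post path
for name, rules in [("block all", BLOCK_ALL),
                    ("allow all", ALLOW_ALL),
                    ("allow all (explicit)", ALLOW_ALL_EXPLICIT)]:
    print(name, "->", can_crawl(rules, "Googlebot", POST))
```

Only the first file keeps Googlebot away from the post; the other two behave identically.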

Block a specific web spider from a specific page
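The original snippet for this entry is not shown here, so the following is only a sketch of what such a rule could look like, checked with Python's standard `urllib.robotparser`. The page path "/p/private-page.html" and the second crawler name "Bingbot" are placeholders chosen for the example.

```python
from urllib.robotparser import RobotFileParser

# Rules that apply to Googlebot only; the page path is a placeholder.
RULES = """\
User-agent: Googlebot
Disallow: /p/private-page.html
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# Googlebot is blocked from that one page but not from other pages.
googlebot_private = parser.can_fetch("Googlebot", "/p/private-page.html")
googlebot_other = parser.can_fetch("Googlebot", "/p/about.html")

# A crawler with no matching User-agent group is not restricted at all.
other_bot_private = parser.can_fetch("Bingbot", "/p/private-page.html")

print(googlebot_private, googlebot_other, other_bot_private)
```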

Block a directory from all web crawlers

A rule that disallows the "p" directory, written as "Disallow: /p/", blocks everything inside that directory and its sub-directories for all web crawlers. If you have a Blogger blog and create this rule in your robots.txt file, you are disallowing all of your static pages from being crawled by any spider.
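To see the effect of blocking the "p" directory, here is a minimal sketch using Python's standard `urllib.robotparser`. The page and post paths are invented for the example; on Blogger, static pages live under "/p/" while posts live under dated paths.

```python
from urllib.robotparser import RobotFileParser

# Block the whole "p" directory for every crawler.
RULES = """\
User-agent: *
Disallow: /p/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# Hypothetical paths: two static pages and one ordinary post.
paths = ["/p/about.html", "/p/legal/privacy-policy.html", "/2020/01/some-post.html"]
allowed = {path: parser.can_fetch("Googlebot", path) for path in paths}

for path, ok in allowed.items():
    print(path, "->", "allowed" if ok else "blocked")
```

Everything under "/p/" is blocked, including nested paths, while the post stays crawlable.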

Allow an item in a blocked directory for Googlebot
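The original snippet for this entry is missing, so this is only a sketch of one way to write it, with "/p/contact.html" as a placeholder page. One detail is worth knowing: Python's `urllib.robotparser` applies the first rule that matches, so the "Allow" line is placed before the "Disallow" line here. Google documents that Googlebot picks the most specific (longest) matching rule, so for Googlebot itself the order should not matter.

```python
from urllib.robotparser import RobotFileParser

# Allow one page inside an otherwise blocked directory, for Googlebot only.
# The Allow line comes first because this parser uses first-match order.
RULES = """\
User-agent: Googlebot
Allow: /p/contact.html
Disallow: /p/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

contact_ok = parser.can_fetch("Googlebot", "/p/contact.html")
about_ok = parser.can_fetch("Googlebot", "/p/about.html")

print("contact page:", contact_ok)
print("about page:", about_ok)
```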

I think you have now learned how to deal with a robots.txt file. You should use the robots.txt file to disallow pages that create duplicate content issues, such as monthly or weekly archive pages, which repeat the same content. One more thing: do you really want your blog to rank at the top when someone searches for a privacy policy or disclaimer? If not, these pages should be kept out of search engines, and compliant search engines will not crawl such pages once you have disallowed them. (A disallowed page that is linked from elsewhere can still show up as a bare URL, so use a noindex robots tag if it must stay out of the index entirely.)

Since the robots.txt file indicates what to crawl and index on your website, and all web crawlers visit your robots.txt file before visiting the rest of your website, it makes sense to include your sitemap in your robots.txt file. That way the spiders can easily discover and reach every page of your website.

Showing sitemap in robots.txt file

This is an XML sitemap for Blogger.
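The sitemap line itself is missing here, so the following is a sketch of how a "Sitemap:" line can sit in a robots.txt file and be read back with Python's standard `urllib.robotparser`. The URL "https://yourdomain.blogspot.com/sitemap.xml" is a placeholder, and `site_maps()` needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# A robots.txt that allows everything and announces a sitemap.
# The sitemap URL is a placeholder; use your own blog's address.
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://yourdomain.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed sitemap URLs (Python 3.8+),
# or None when the file has no Sitemap line.
sitemaps = parser.site_maps()
print(sitemaps)
```

The "Sitemap:" line is independent of the User-agent groups, so it can be placed anywhere in the file.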

SEO friendly robots.txt file in Blogger
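The author's own file is not reproduced here. As a stand-in, here is a sketch modeled on the default robots.txt that Blogger blogs typically serve, checked with Python's standard `urllib.robotparser`. The domain, the label path, and the post path are placeholders, and the choice to block "/search" (label and search result pages) is an assumption, not the author's stated rule set. The text between the triple quotes is the robots.txt content.

```python
from urllib.robotparser import RobotFileParser

# Placeholder file: replace yourdomain.blogspot.com with your blog's address.
BLOGGER_ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://yourdomain.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(BLOGGER_ROBOTS_TXT.splitlines())

# Hypothetical paths: one post and one label (search) page.
checks = [
    ("Googlebot", "/2020/01/some-post.html"),
    ("Googlebot", "/search/label/SEO"),
    ("Mediapartners-Google", "/search/label/SEO"),
]
results = {(agent, path): parser.can_fetch(agent, path) for agent, path in checks}

for (agent, path), ok in results.items():
    print(agent, path, "->", "allowed" if ok else "blocked")
```

With these rules, ordinary crawlers skip the duplicate-prone "/search" pages, while the AdSense crawler (Mediapartners-Google) may fetch everything.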

Now, copy all the text in the above code. We will upload it to our robots.txt file in Blogger.

Go to Blogger Dashboard -> Select your blog -> Settings -> Search preferences

Under "Crawlers and indexing", enable custom robots.txt content by clicking "Yes", then paste the copied content there as shown in the preview. Finally, click Save changes. You have successfully created a custom robots.txt file for your Blogger blog.

You can now check the robots.txt file of your blog at the URL mentioned earlier in this post. If you have disallowed specific pages in your robots.txt file, you can check them in Google Webmaster Tools, under the robots.txt tester, by choosing your site URL.

Conclusion: the robots.txt file is beneficial for those who don't want to share specific things with search engines or other robots. You can also use it to resolve duplicate content issues.
